Following the publication of my paper, I was invited to collaborate on yet another research project, this time involving the detection of musical patterns in real time. The project was the first step towards a larger idea: an Internet-of-Musical-Things system. The idea was this: “a smart musical instrument, which is able to convert musical notes to MIDI signals in real time, and a pattern detection system, which is able to recognize pre-programmed patterns, in real time, and use that data to control peripheral devices such as stage lights or smoke machines”.

After discussing potential approaches, we decided to start with a monophonic input stream (monophonic means that only a single musical note is played at a given time). We compared several methods of pattern detection: a deterministic system, a system based on a single neural network, and a system based on multiple neural networks.

We introduced a novel method to represent MIDI notes within the context of pattern detection: each note is represented by a 3-element matrix whose elements denote the pitch, amplitude, and duration of the note. These attributes are sufficient for pattern detection. The pitch and duration are obtained from the MIDI metadata, and the amplitude is obtained from the velocity field. Refer to the figure below: Ci is the note, Pi is the pitch, and Ai and Di are the amplitude and duration respectively.

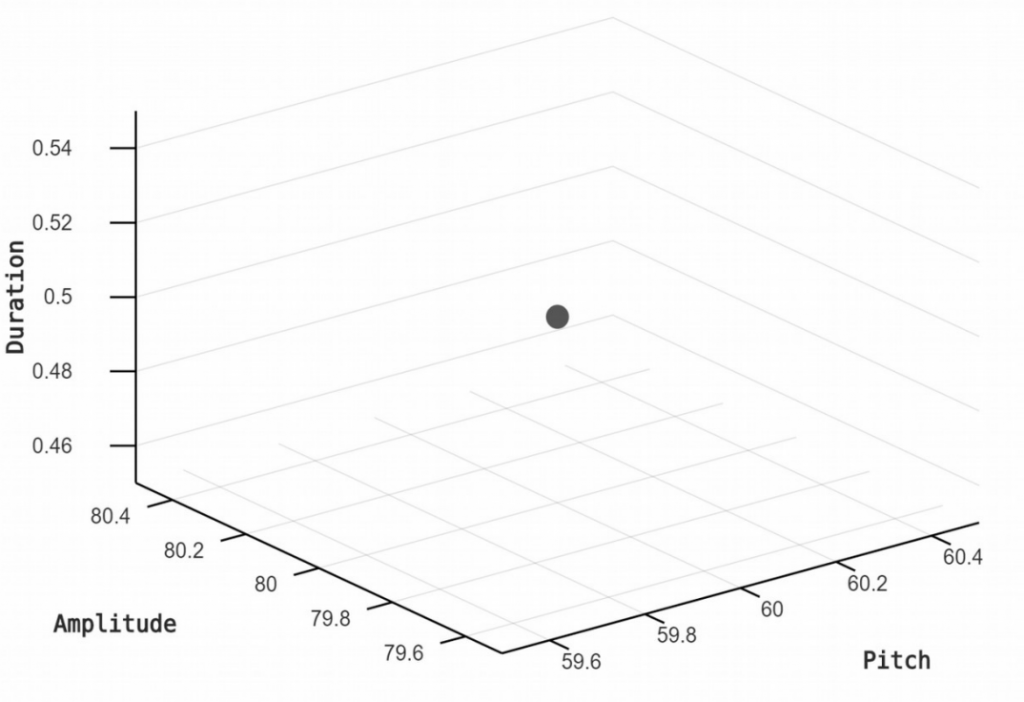
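
The representation above can be sketched in a few lines of Python. This is only an illustration, not the project's actual code: the names `Note` and `note_from_midi` are hypothetical, and I am assuming that the duration is measured in seconds between a note-on and a note-off event.

```python
from typing import NamedTuple


class Note(NamedTuple):
    """Hypothetical 3-element note representation (P, A, D)."""
    pitch: int       # MIDI note number, 0-127 (60 is middle C)
    amplitude: int   # taken from the note-on velocity, 0-127
    duration: float  # assumed unit: seconds between note-on and note-off


def note_from_midi(note_number: int, velocity: int,
                   on_time: float, off_time: float) -> Note:
    """Build the 3-element representation from basic MIDI event data."""
    return Note(note_number, velocity, off_time - on_time)


# A middle C played at velocity 90 and held for half a second.
c4 = note_from_midi(60, 90, on_time=1.0, off_time=1.5)
print(tuple(c4))
```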

The complexity arises when tolerances are introduced. Human players will find it difficult to play exactly the same thing every time, so tolerances are needed. Before introducing tolerances, a single note, represented by the three attributes discussed above, can be depicted in a 3-dimensional space as below:

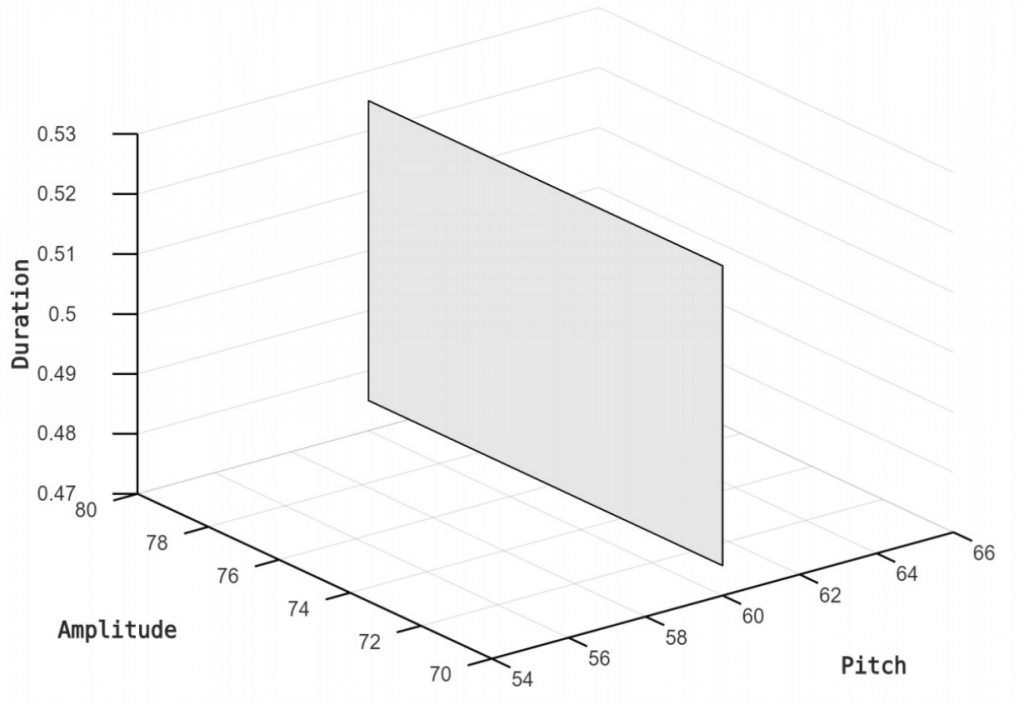

Now for tolerances! Each attribute, except for the pitch, will have a tolerance value. When the tolerances are introduced to the 3D space, a note will look like below:

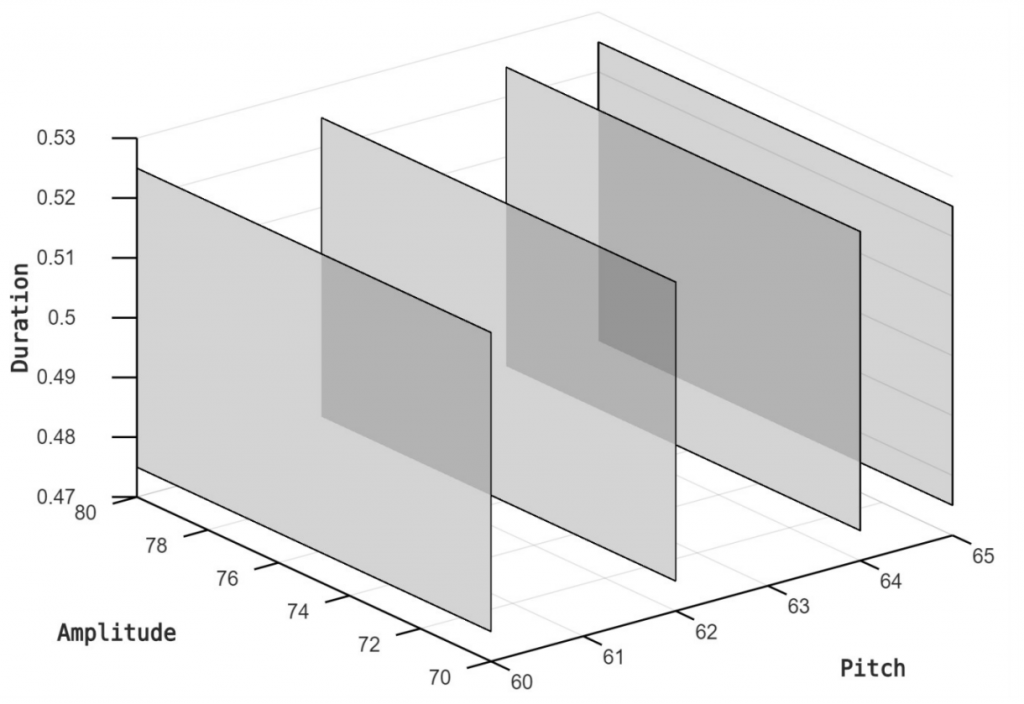
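
In code, a tolerance check for a single note might look something like the sketch below. The function name `within_tolerance` and the tolerance values are made up for illustration, and I am assuming symmetric tolerances (the same margin above and below the target value), which may differ from what the paper uses.

```python
def within_tolerance(played, target, amp_tol, dur_tol):
    """Check one played note against one pattern note.

    Both notes are (pitch, amplitude, duration) tuples. The pitch must
    match exactly; amplitude and duration may deviate by up to their
    tolerance (assumed symmetric here).
    """
    pitch, amplitude, duration = played
    t_pitch, t_amplitude, t_duration = target
    return (
        pitch == t_pitch
        and abs(amplitude - t_amplitude) <= amp_tol
        and abs(duration - t_duration) <= dur_tol
    )


target_note = (60, 90, 0.5)  # middle C, velocity 90, 0.5 s

# Slightly softer and slightly longer than the target: still accepted.
close_enough = within_tolerance((60, 85, 0.55), target_note, amp_tol=10, dur_tol=0.1)
# Wrong pitch: rejected no matter how close the other attributes are.
wrong_pitch = within_tolerance((61, 90, 0.5), target_note, amp_tol=10, dur_tol=0.1)
```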

Once the tolerances are defined, classifying patterns becomes much harder. The following figure shows a pattern of 4 notes (C, D, E, F), plotted on a 3D plot. Pattern notes can occupy any location in the sample space, and therefore creating classes for classification becomes that much more difficult.

To overcome this, we compared the performance of several methods:

- A deterministic boundary checking method

- A single neural network based method

- A multiple neural networks based method

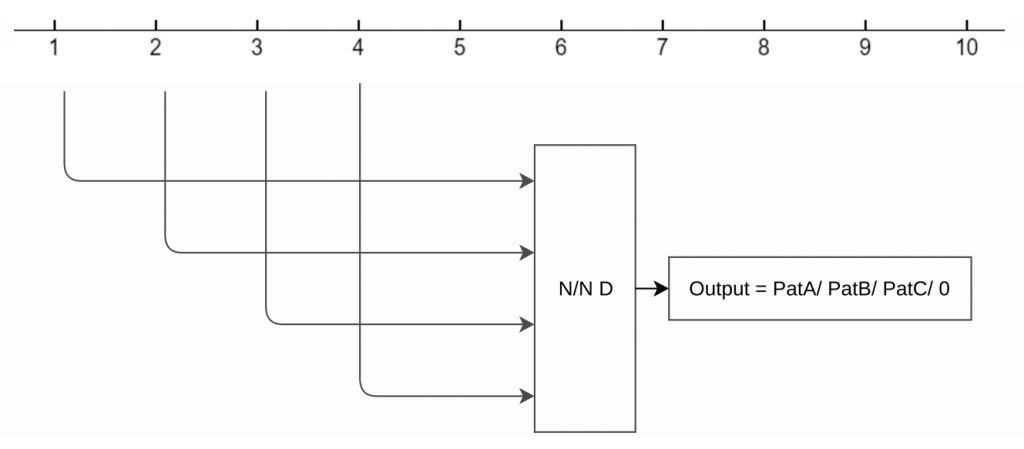

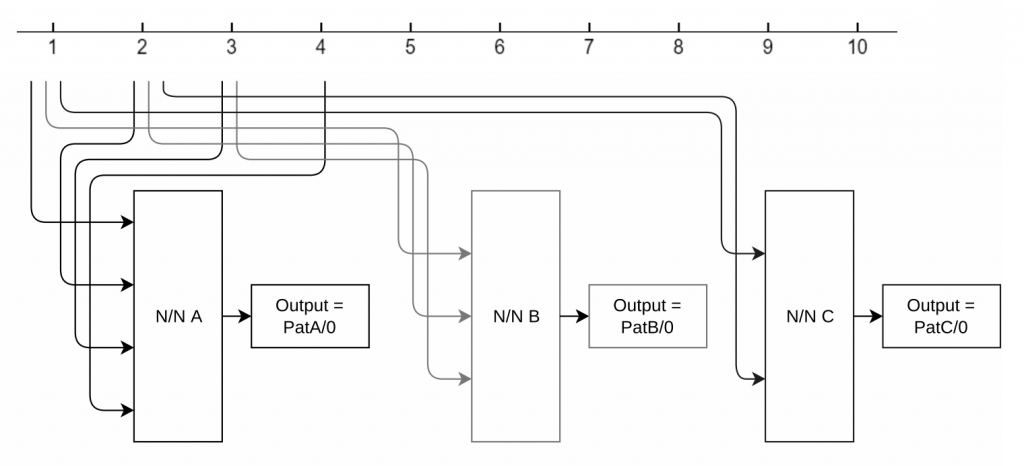

The deterministic system is a simple boundary-checking system that performs a cascading check of whether each note is within the tolerances of the corresponding pattern note. The single neural network method, as the name suggests, uses a single neural network trained to detect all patterns. Since patterns may be of different lengths, shorter patterns are padded at the end to equalize their length. The multiple neural network based method uses one trained neural network per pattern. Such a system is possible due to the relatively small number of patterns. The accompanying figures illustrate the single and multiple neural network based methods.
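
To make the deterministic check and the padding step a bit more concrete, here is a rough sketch. It is my own reading of a "cascading" check (stop at the first note or attribute that falls outside its boundary), not the code from the paper. The names `matches_pattern`, `pad_patterns` and the padding value `PAD` are hypothetical.

```python
PAD = (0, 0, 0.0)  # hypothetical filler note used only for padding


def matches_pattern(window, pattern, amp_tol, dur_tol):
    """Cascading boundary check: bail out at the first failing attribute."""
    if len(window) != len(pattern):
        return False
    for (pitch, amp, dur), (t_pitch, t_amp, t_dur) in zip(window, pattern):
        if pitch != t_pitch:              # pitch has no tolerance
            return False
        if abs(amp - t_amp) > amp_tol:    # amplitude boundary
            return False
        if abs(dur - t_dur) > dur_tol:    # duration boundary
            return False
    return True


def pad_patterns(patterns):
    """Pad shorter patterns at the end so that all have the same length."""
    longest = max(len(p) for p in patterns)
    return [list(p) + [PAD] * (longest - len(p)) for p in patterns]


# The C, D, E, F pattern, each note as (pitch, amplitude, duration).
cdef = [(60, 90, 0.5), (62, 90, 0.5), (64, 90, 0.5), (65, 90, 0.5)]
played = [(60, 84, 0.52), (62, 95, 0.47), (64, 90, 0.55), (65, 88, 0.5)]
is_match = matches_pattern(played, cdef, amp_tol=10, dur_tol=0.1)

padded = pad_patterns([cdef, cdef[:2]])
```

The padded patterns would only be needed for the single neural network, where every input must have the same shape. The deterministic check can compare each pattern at its own length.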

We tested the programs by simulating a real-time musical stream, and the results were very promising. The running times are on the order of milliseconds, which shows that the system is able to work in real time.
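
For a feel of what such a simulation could look like, the sketch below feeds notes one at a time into a sliding window and times each detection attempt. This is not our actual test harness: `detect_in_stream` is a hypothetical helper, the matcher is a stand-in that only compares pitches, and the stream is a toy example.

```python
import time
from collections import deque


def detect_in_stream(stream, pattern_length, matcher):
    """Slide a fixed-size window over the stream and time each check.

    Returns a list of (index_of_last_note, elapsed_milliseconds) for
    every window in which the matcher reports a detection.
    """
    window = deque(maxlen=pattern_length)  # oldest note drops out automatically
    detections = []
    for index, note in enumerate(stream):
        window.append(note)
        if len(window) < pattern_length:
            continue  # not enough notes yet
        start = time.perf_counter()
        found = matcher(list(window))
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        if found:
            detections.append((index, elapsed_ms))
    return detections


# Toy stream of pitches with C, D, E, F hidden in the middle.
stream = [59, 60, 62, 64, 65, 67]
detections = detect_in_stream(stream, 4, lambda w: w == [60, 62, 64, 65])
for index, elapsed_ms in detections:
    print(f"pattern ended at note {index}, check took {elapsed_ms:.4f} ms")
```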

We presented the research at the 1st Workshop of the Internet of Sounds, which was held alongside the 27th Conference of the FRUCT Association. The conference was to be held in the gorgeous town of Trento in northern Italy, but due to the outbreak of COVID-19, the entire conference shifted to a virtual mode. As a result, participants were asked to pre-record their presentations. Below is my presentation: Towards Real-Time Detection of Symbolic Musical Patterns: Probabilistic vs. Deterministic Methods.

The paper is published in the conference proceedings, and can be viewed through this LINK.

The source code for the project can be found HERE.

This research was done with the collaborative effort of:

- Nishal Silva, Independent researcher, Colombo, Sri Lanka.

- Prof. Carlo Fischione, KTH Royal Institute of Technology, Stockholm, Sweden.

- Dr. Luca Turchet, University of Trento, Trento, Italy.